AI Glossary

-

RAG - Retrieval-Augmented Generation

An LLM knows a lot of things. But nothing about recent events, your companies internal help articles, your personal files like resumes etc.

A RAG can be broken down into 👇

Retrieval - organize new information in vector format that is easy to run searches (semantic search)

augmented - for any user query, identify relevant chunks of information from the vector-store

generation - incorporate the newly retrieved information into a response to the user query

Technically, an LLM is only needed for the generation step, while vector embeddings and semantic search do the heavy-lifting in a RAG.

-

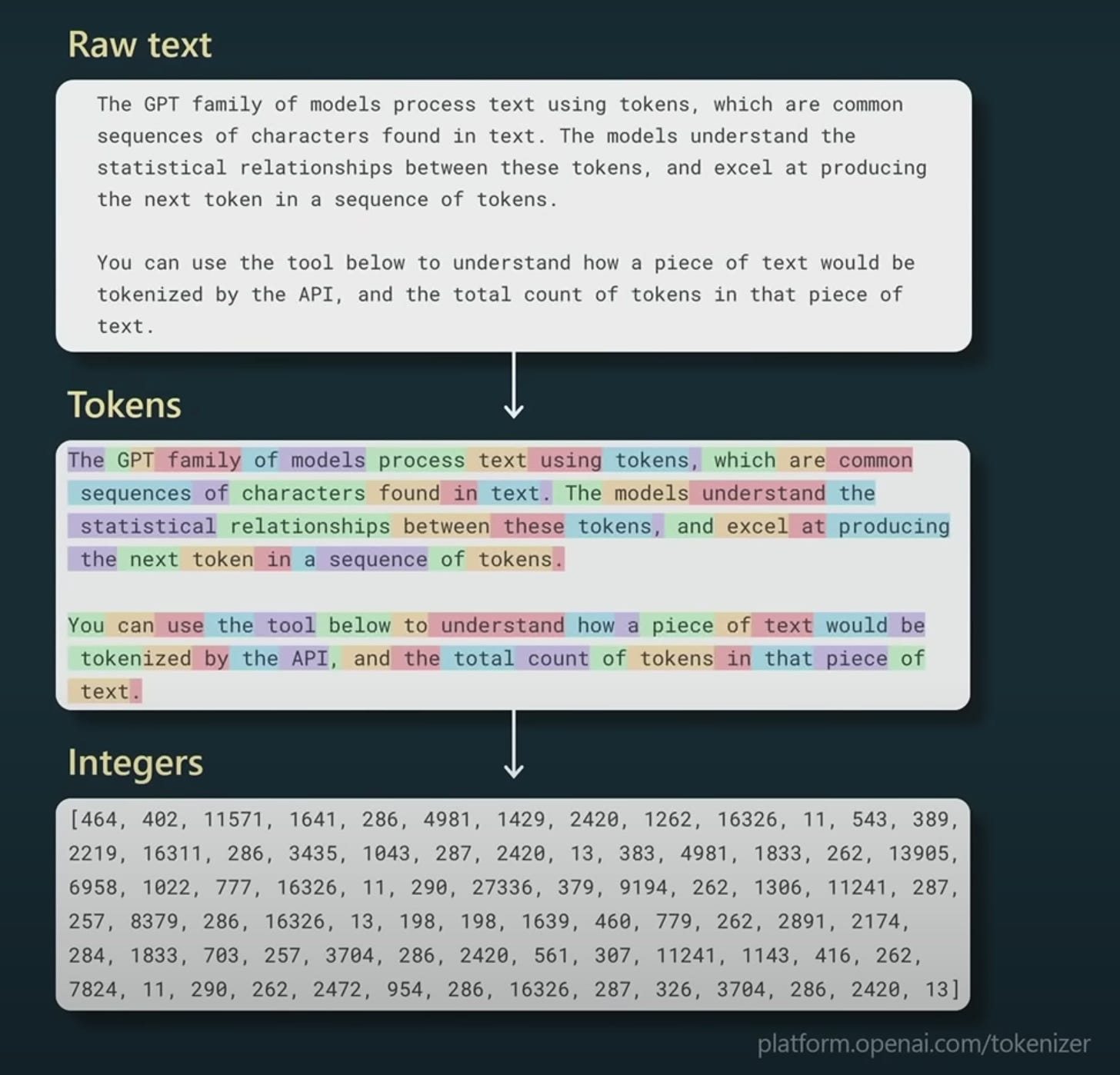

Tokenization allows converting text inputs to a sequence of numbers understandable by the AI Model.

The mapping from each individual text chunk, i.e. token to the number is determined by the tokenization algorithm.

-

Language models (starting GPT2) are trained on large bodies of text in a ‘self-supervised approach’ which is fancy term for next-word-prediction.

“The quick brown fox jumps over the lazy ____.”

is a next word prediction example in the wild.

This means, these models are, by nature, trying to complete writing text that your started. The mind-blowing thing is how they also learn logic, creativity and common sense in this process.

With that context, prompt engineering is giving the LLM sufficient context such that it can complete the text we provided in the prompt, thereby completing the “task”.

For example, if you have seen prompts begin like below, they are engineering the prompt to give the LLM the right context for their task.

“You are an expert sales copywriter …

-

RLHF - Reinforcement Learning with Human Feedback

A base-model itself is “just” a text-completion model that can generate coherent and creative text, but doesn’t quite know how to follow instructions… as a good assistant (like ChatGPT) would.

With some assistance from human feedback, we can steer the model towards outputs that is directly following the instruction.

RLHF is a step in the fine-tuning stage where human-feedback on the base model’s text-completion outputs are incorporated back into model so it can learn to only generate the high quality text outputs.

-

In Autoregressive models, like GPTs, the outputs (tokens) are generated sequentially, i.e. token by token, and each token's prediction conditioned on the tokens that have come before it. This approach enables the model to capture intricate patterns in language but comes with the trade-off of increased computational complexity.

For example, consider the sentence: "The quick brown fox jumps over the lazy dog."

In an autoregressive model, the process might look like this:

-

"The" -> model generates "quick"

-

"The quick" -> model generates "brown"

-

"The quick brown" -> model generates "fox" ...and so on.

Non-Autoregressive models generate all output tokens in parallel, without strict sequential dependence. This can lead to faster generation but might sacrifice some level of context and coherence.

For example, BERT (Bidirectional Encoder Representations from Transformers) employs a bidirectional context modeling approach, which means that each token is influenced by both tokens to its left and tokens to its right in the input sequence. This is in contrast to autoregressive models that are only influenced by tokens to their left.

In a non-autoregressive model using the Masked Token Prediction approach, the process might look like this:

-

"The quick [MASK] jumps over the lazy [MASK]."

-

Model predicts the masked tokens in parallel: "The quick brown jumps over the lazy dog."

In this example, the masked tokens are all predicted simultaneously, leveraging the contextual information present in the input sentence. This parallelism can lead to faster generation compared to autoregressive models, where each token is generated based on the preceding ones.

-

-

Parameter-efficient fine-tuning

LLMs have billions of parameters that need tweaking during the fine-tuning step, which can be expensive to fully realize. So, on the bleeding edge of LLM research, called Parameter efficient fine-tuning, people are experimenting with reducing the number of parameters that needs to be updated, while still achieving the same quality of resulting models.

For example, low-rank adaptation (LoRA) is a PEFT technique that has become especially popular among open-source language models. The idea behind LoRA is that instead of updating all parameters of the base model to fine-tune to a specific task, there is a low-dimension matrix that can represent the space of the downstream task with very high accuracy.

Fine-tuning with LoRA trains this low-rank matrix instead of updating the parameters of the main LLM. The parameter weights of the LoRA model are then integrated into the main LLM or added to it during inference. LoRA can cut the costs of fine-tuning by up to 98 percent.

-

Pre-training an LLM is like a toddler. Only the Meta’s and OpenAI’s of the world are resourced enough (time and money) to create these. Pre-trained LLMs (or base models) have a lot of world knowledge baked into it considering the entire internet is thrown at it, but they aren’t good at following instructions.

Fine-tuning a pre-trained model is where we teach it a specific task. The model builds on its strong foundation of knowledge and learns a narrow task - like teaching it to decode legal jargon.

Fine-tuning needs a significant number of training examples showing it inputs-output pairs so it learns the task effectively. Understandably, this is time-consuming to create the dataset.

Instruction-tuning is a newer approach where we draft a set of instructions that describe how to perform a task.

Often, combining fine-tuning and instruction tuning results in more powerful and accurate models, by effectively teaching it a new domain followed by specific instruction-following capability.

-

In-context Learning is the technical (fancy) term for what we all do with the ChatGPT web app when we task it with doing things for us, essentially boiling down to prompt engineering. It could be zero-shot learning with just the instructions or few-shot learning where we include a few example to further specify the objective.

It is termed ‘in-context’ as we are specifying the task and examples within it’s context, via the prompt. In doing so, the model is learning about the new task without having to change any of its parameters as is the case with pre-training or fine-tuning.

-

Precision in the context of LLMs and neural networks refers to the format and size of the numbers used to represent the weights in the networks.

By default, these weights are stored in a 32-bit floating-point format (float32). Lowering precision, for example to a 16-bit floating point format (float16), reduces the memory required to store these weights, which can be beneficial in scenarios with memory constraints. However, this comes at the cost of accuracy due to the accumulation of rounding errors. The trade-off between precision and memory management can be navigated by using mixed precision, which employs different data types in different parts of the network to balance the needs of precision and memory efficiency.

-

Quantization is a technique that further reduces the precision of the model weights, but in a way that's more structured than merely dropping precision. It calculates a quantization factor to scale the weights to lower precision, even to integer values. This process transforms a continuous space (like float32) into a discrete one, creating 'bins' to categorize the weights. The denser regions of the distribution get smaller bins (greater resolution), while the tails get lower resolution.

This technique can be particularly beneficial for deploying models on hardware with limited resources or for speeding up inference. Through quantization, the weights are mapped to lower precision in such a way as to minimize the loss of accuracy, making it a powerful tool for optimizing models for deployment in resource-constrained environments.

-

Gradient accumulation in training neural networks addresses the challenge of limited batch sizes, especially on smaller hardware.

Typically, larger batch sizes help smooth out the training process by reducing the variance and noise in the optimization steps, but they require more memory. When restricted to smaller batch sizes, the training process can resemble stochastic gradient descent, which is more erratic.

Gradient accumulation circumvents this by simulating larger batch sizes without the extra memory demand. During training, instead of updating the model parameters after every backward pass, the gradients are stored in a buffer. After several forward and backward passes, the accumulated gradients are summed together to perform a single, larger update on the model parameters. This technique essentially emulates the effect of training with a larger batch size, smoothing out the training process and potentially leading to better model convergence without the additional memory overhead.

-

LoRA is a Parameter Efficient Finetuning technique which allows fine-tuning LLMs by adjusting a subset of weights rather than altering the entire weight matrix.

Here, the change in model weights (delta W, or ΔW) are re-parametrized into a lower dimension matrices which can be trained to adapt to the new data while keeping the overall number of changes low. The original weight matrix remains frozen and doesn’t receive any further adjustments. To produce the final results, both the original and the adapted weights are combined.

Lora approach has a number of advantages:

-

LoRA makes fine-tuning more efficient by drastically reducing the number of trainable parameters.

-

Performance of models fine-tuned using LoRA is comparable to the performance of fully fine-tuned models.

-

LoRA does not add any inference latency because adapter weights can be merged with the base model.

-

-

QLoRA combines Quantization with LoRA to reduce the hardware requirements even further. The 2023 QLORA paper by Dettmers et al. (Original paper) used 4-bit quantized model weights instead of the original model weights, as well as LoRA on the attention layers.

As per the paper, the QLoRA finetuning approach reduces memory usage enough to finetune a 65B parameter model on a single 48GB GPU while preserving full 16-bit finetuning task performance.

-

When a language model generates incorrect or fabricated information, often due to gaps in its training.

-

Knowledge encoded in the weights of a language model derived from its training data.

-

In RAG, this refers to retrieving information stored in external documents and not encoded within the model's parameters.

-

Dense Passage Retrieval (DPR) System

A key component of RAG responsible for retrieving information by converting user queries and external documents into dense embeddings and returning the top K most relevant ones.

-

High-dimensional vectors representing words, sentences, or documents, capturing their semantic and contextual meanings.

-

Cosine Similarity, Dot Product, Manhattan Distance

Methods used in DPR to compare query embeddings with document embeddings to find the most relevant documents.

-

Specialized databases optimized for quick retrieval of relevant documents, where these documents are stored as embeddings.